DWB #5: Foreign aid, what we learned about the Summit, and broken promises on animal welfare

Doing Westminster Better in October 2023

Big things have been happening in effective altruism, UK politics, and world news.

The fall of Sam Bankman-Fried continues. Michael Lewis has published his FTX exposé, Going Infinite, and Bankman-Fried’s US trial has begun.

The Conservatives and Labour have each held their party conferences.1 Conference season is an opportunity for parties to come together to decide what they stand for, and to present themselves to the country as the government (or government-in-waiting) that we deserve. Clearly, the country no longer feels that the Conservatives deserve to be in government. There’s no election yet, but the Tories seem resigned to their defeat and Labour resolved to win.

Benjamin Netanyahu’s government is now at war with Hamas. You know this; you know what you think about this; you know what everybody on social media thinks about this. All I have to say is that war anywhere evokes in me a sense of moral vertigo, the feeling that says: suffering can be unimaginable in depth and in scale. Moral vertigo is how I feel witnessing Jews and Muslims, including children, across the world facing renewed bigotry. It’s how I feel witnessing Israelis murdered, mourning, and conscripted into war. And it’s how I feel witnessing Palestinians being killed to answer for the crimes of a terrorist group which does not answer to them – having governed without an election for seventeen years over a population which is now 50% under eighteen, and so having long since been drained of any democratic mandate. Moral vertigo is my motivation for trying to do good at scale: who could look at this volume of suffering and accept that it can go on?

This newsletter examines the intersection of EA and UK politics, so much of the Israel/Palestine crisis is out of scope. I’ll be looking at a narrow slice of the problem, UK aid to Palestine, and leave the rest of the conversation to those who are better informed, whose hurt is more immediate and more raw, and whose lives are directly implicated in this disaster.

(This is a long newsletter. If you’re on email, click through to read the whole thing. But feel free to pick and choose what interests you.)

Contents

Aid policy I. UK commits extra £10m to Palestinian aid

Aid policy II. Grain drain

AI policy I. Everything we learned about the AI Safety Summit this month

AI policy II. Sunak’s “legacy moment”?

AI policy III. Starmer isn’t biting on AI regulation

AI policy IV. AI on (and off) the international stage

AI policy V. Supercomputer for Scotland

AI policy VI. Zac Goldsmith worried about AI extinction

Animals policy I. Legislation for the animals (but not enough)

Animals policy II. Meat and international trade

Animals policy III. Alternative proteins being fast-tracked

Animals policy IV. Is Britain ready for ‘nanny state’ meals?

Aid policy I. UK commits extra £10m to Palestinian aid

The Prime Minister announced the additional humanitarian funding on Monday. This is a 37% uplift to UK funding to occupied Palestine this year (including another £10m announced last month, before the Israel-Hamas war began). The official announcement is here; the PM said:

An acute humanitarian crisis is unfolding, to which we must respond. We must support the Palestinian people – because they’re victims of Hamas too.

International Development Secretary Andrew Mitchell has said the UK will soon start moving “essential humanitarian supplies forward near the region”. He told the BBC that the government is committed to “do[ing] whatever is necessary to play our part in meeting humanitarian need”, and has also said the UK has a “moral and practical responsibility” to supply aid.

Mitchell’s counterpart, Labour’s Lisa Nandy, has written to him to urge moving more quickly, working with other UN states to better support UNRWA (the UN Relief and Works Agency for Palestine Refugees in the Near East, which has a shortfall of $104m), working with international partners to call for safe evacuation corridors (and make clear that civilians without access to evacuation routes aren’t combatants/targets), and calling on all combatants to respect UN facilities, humanitarian workers and journalists. See her Tweet here.

Since the Hamas terrorist attacks, it hasn’t been completely clear that the UK would recommit to providing aid to Palestine. Western governments, including the UK, affirmed their support for Israel, and critics worried that this could amount to ignoring Palestinian suffering. What happened in the EU is illustrative: after the EU affirmed support for Israel, Commissioner Olivér Várhelyi unilaterally announced the suspension of EU aid to Palestine. This decision was quickly reversed.2

Therefore, the funding announcement provides some reassurance in a difficult moment.

Critics will charge that neither party is doing enough. I expect a lukewarm reaction, for instance, to Defence Secretary Grant Shapps declining to say whether a ground invasion of Gaza would be justified. In Labour, the criticism is coming from the inside: party leadership has written to council leaders to stem a potential flood of resignations protesting lacklustre support for Palestine. Keir Starmer is threading a needle here; there’s an antisemitic strain to the pro-Palestinian British left, and one of his key projects as leader has been to detoxify Labour and rebuild relations with the Jewish community.

I don’t know what a sufficient UK policy response to Israel/Palestine looks like, but I think we should consider foreign aid a necessary component of any UK policy response.

In humanitarian crises, effectiveness in charity (whether nation-state aid or individual giving) is of the utmost importance. But it’s also uniquely difficult in a time of crisis to know where to give most effectively. Countries giving aid are navigating the challenges of ensuring it supports the people who need it most, and doesn’t fund terrorist activity. Individual donors are trying to maximise the good they can do absent strong evidence. I found that this 2022 Atlantic article, about effective altruism in the Ukraine war, really resonated with these challenges.

Aid policy II. Grain drain

Deputy Prime Minister Oliver Dowden announced a £3 million contribution to the UN World Food Programme after saying that “the hungry and malnourished people of the developing world are Russia’s victims too”. He was addressing the UN Security Council in New York.

Ukraine has been one of the world’s largest exporters of grain. The Russian invasion has restricted Ukraine’s ability to sell to the developing world, heightening hunger and the risk of famine and highlighting how fragile global food security is.

This has also led to an oversupply of Ukrainian grain in eastern and central Europe, especially after an EU import restriction was lifted in September. The consequent economic pain for local farmers was driving tensions between Ukraine and Poland (see here and here), but I’m unclear as to whether/how far the Polish election will reset the relationship with Ukraine.

The UK will be hosting an international summit on grain policy in December. (Easy to overlook with so much AI Safety Summit news around these parts.)

Also at the UN: Andrew Mitchell announced £295 million in research funding to tackle diseases.

For World Food Day: the UK announced a partnership with big business to address world hunger. Details here.

AI policy I. Everything we learned about the AI Safety Summit this month

There are five key objectives

a shared understanding of the risks posed by frontier AI and the need for action

forward process for international collaboration on frontier AI safety, including how best to support national and international frameworks

appropriate measures which individual organisations should take to increase frontier AI safety

areas for potential collaboration on AI safety research, including evaluating model capabilities and the development of new standards to support governance

showcase how ensuring the safe development of AI will enable AI to be used for good globally.

These come from the recently published ‘Introduction to the AI Safety Summit’.

This document also has some detail on that first objective. What are the risks posed by AI? The government recognise a wide range of harms: “deliberate or accidental; caused to individuals, groups, organisations, nations or globally; and of many types, including but not limited to physical, psychological, or economic harms”.

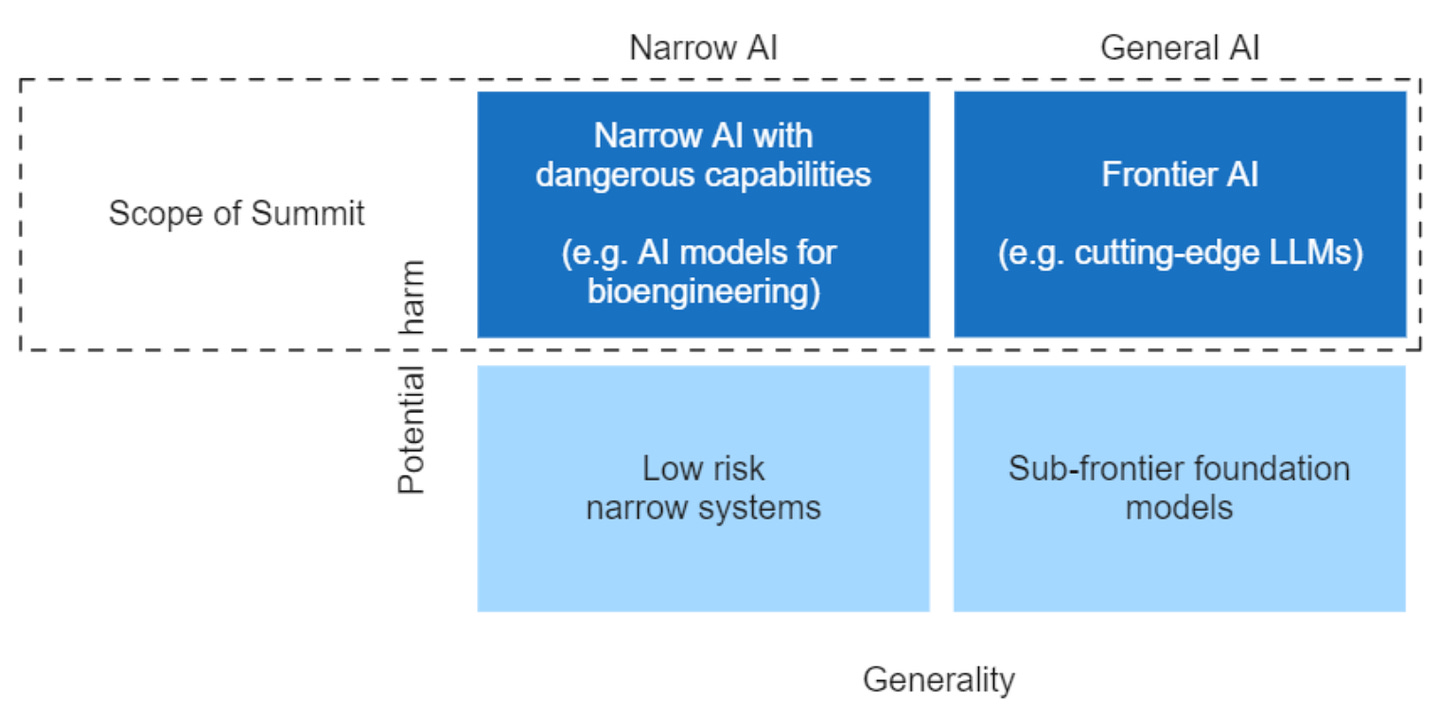

But the AI Safety Summit will focus on frontier models (“highly capable general-purpose AI models that can perform a wide variety of tasks and match or exceed the capabilities present in today’s most advanced models”) and on narrow AI systems which could be deployed dangerously (e.g. “AI models for bioengineering”).

Here’s a helpful diagram of which models are and aren’t in scope:

Zooming in on a subset of AI systems means we’re also zooming in on a subset of AI risks. The government are worried about misuse risks (“where a bad actor is aided by new AI capabilities in biological or cyber-attacks, development of dangerous technologies, or critical system interference”) and loss of control risks (“that could emerge from advanced systems that we would seek to be aligned with our values and intentions”). Loss of control strikes me as double-valenced: one side of the risk is that humans lose control of the AIs, and another is that humanity loses control over our future to AI.

Anybody who has been following AI safety discourse will have recognised by now that the Summit is about ‘existential risks’ instead of ‘AI ethics’ concerns.

But the government, in acknowledging that “AI safety does not currently have a universally agreed definition”, is also trying not to alienate those worried about other important issues, like algorithmic bias. To that end, the government will also sponsor ‘Road to the Summit’ events, “wider engagement [to] ensure that other important issues are also discussed”.

There are ~100 attendees. (Elon Musk is a maybe)

To promote the Summit, Ian Hogarth (Chair of the Frontier Model Taskforce) and Michelle Donelan (DSIT Secretary) have done Q&As on Twitter and LinkedIn respectively. One of the interesting tidbits that Hogarth dropped: there’ll be about 100 people in attendance at the Summit.

Let me know if you’re on the guest list!

One familiar face who might be at Bletchley: Elon Musk is “very, very welcome” at the Summit, says Chancellor Jeremy Hunt. Hunt says that he hasn’t spoken to Musk, but that “I think we’re going to get most of them [tech leaders] coming.” (H/t Bloomberg.)

The Summit hosts will have to strike a balance between finding subject matter experts and resisting the impression of regulatory capture. Some events, like Chuck Schumer’s AI briefing for US Senators, have already been accused of being regulatory capture in action. Some AI governance questions, like whether frontier models should be (allowed to be) open-sourced, resolve differently if you prioritise mitigating existential risk or regulating for a level playing field between Big Tech and smaller companies. AI safety advocates should be careful about this.

I’ve written a fair amount about the ‘will they/won’t they’ of an invitation for China. It’s seemed pretty certain for a while now that China will be at the Summit, but The Telegraph are now reporting that they may only stay for day one (paywalled).

What will they be missing? The Telegraph report that day two is about “security”. The recently released Summit timetable says day two will “convene a small group of governments, companies and experts to further the discussion on what steps can be taken to address the risks”. (The day one schedule is much more explicit: check out the timetable here.)

There won’t be a new intergovernmental organisation

Experts have been pushing for a new intergovernmental organisation to regulate international AI safety. There’s been discussion, for example, about a CERN or an IAEA for AI (see here for a proposal for an IPCC for AI, written by Mustafa Suleyman and Eric Schmidt). It’s now been confirmed that establishing such an organisation isn’t on the agenda at the forthcoming AI Safety Summit.

Here’s Matt Clifford on Twitter:

This will be a disappointment to the AI safety community. But it’s early doors, and it was unrealistic to expect a new international organisation at this stage. The proposal is being taken seriously, with rumours that both Italy and China are looking to lead the charge to establish such a group.

The draft communique has leaked

A communique is a document signed by diplomatic parties. Usually more platitude than policy, they’re important nonetheless: a public statement of intention at the highest level. The Telegraph report (paywalled) that the two-page draft “does not propose new laws or a global regulator”, but does:

emphasise the catastrophic risks of frontier models

commit to developing an “international science network” to research AI safety

call on AI labs to invest in safety

pledge to “intensify and sustain our cooperation to identify, understand and as appropriate act”.

Here’s part of the leaked communique:

“The most significant of these risks arise from potential intentional misuse or issues of control, where AI systems may seek to increase their own influence and reduce human control, and these issues are in part because those capabilities are not fully understood.

“We are especially concerned by such risks in domains such as cybersecurity and biotechnology. There is potential for significant, even catastrophic, harm, either deliberate or unintentional, stemming from the most dangerous capabilities of these AI models.

“Given the rapid and uncertain rate of change of AI, and in the context of the acceleration of investment in technology, we affirm that deepening our understanding of these potential risks and of actions to address them is especially urgent.”

The Telegraph also note that some of the most extreme language might be diluted, as it “does not reflect the Government’s position”, and that a commitment to “involve a broad range of partners as appropriate” is a nod to China.

AI policy II. Sunak’s “legacy moment”?

The Telegraph have published two pieces offering insight into how seriously Rishi Sunak takes AI risks.

Sunak fears “Britain has one year to prevent AI running out of control”. According to Whitehall sources, the PM believes there is only a “small window” of opportunity here.

I take seriously the thrust of this piece – i.e. that Sunak is sincerely worried. But it’s worth flagging that those Whitehall sources almost certainly don’t know Sunak. They know something about his mindset because, as civil servants, they’re cogs in the great machinery of government which, from some distance, Sunak steers.

There’s “an increasingly apocalyptic tone in Westminster” and Sunak sees AI safety as “his legacy moment”. I feel like this other article (by the same journalist, James Titcomb) gets closer to Sunak’s psychology. Titcomb reports that Sunak is “deeply involved in the AI debate.”

“He’s zeroed in on it as his legacy moment. This is his climate change,” says one former government adviser.

I’m glad we have a PM who takes AI safety so seriously. But I would remind him that climate change should also be his climate change.

Titcomb is also reporting here on links between AI safety experts and EA, drawing on Laurie Clarke’s reporting for Politico last month. I’m highlighting this just because I’m pleased to see somebody standing up for EA:

One AI researcher defends the associations, saying that until this year effective altruists were the only ones thinking about the subject. “Now people are realising it’s an actual risk but you’ve got these guys in EA who were thinking about it for the last 10 years.”

AI policy III. Starmer isn’t biting on AI regulation

Keir Starmer isn’t so keen to carry on with Rishi Sunak’s legacy projects.

Politico have an interesting report about how the Labour Party sees AI. Vincent Manancourt, Tom Bristow and Laurie Clarke write that Starmer is keen to cut red tape on AI, and sees AI as a way to boost economic growth and public services to create a “decade of national renewal”. This is consistent with Starmer’s vision for Labour: the party leadership is trying to sell themselves as good for business, and as technocrats who are ready to fix Britain.

It’s not clear if Starmer has a take on existential risk from AI. In theory, his pro-AI proposals could involve jettisoning Sunak’s work on AI safety. (Quite the role reversal, to have the Tory leader looking more pro-regulation than Labour’s leader!) But that could be painting with too broad a brush: there’s no reason why using AI to (e.g.) supercharge the NHS can’t come side-by-side with investing in AI safety research.

Starmer’s attitude to AI matters. This is the man everybody’s saying will be the next Prime Minister.

I’ve written before about unions’ sceptical approach to AI, which they (very reasonably) fear will take jobs and enable firing by algorithm. Politico’s report acknowledges this, discussing a motion on AI in the workplace which was put forward by two trade unions and passed by party members at the Labour conference. Per LabourList, that motion called on Labour to:

Develop a “comprehensive package of legislative, regulatory and workplace protections” to ensure the “positive potential of technology is realised for all”

Ensure a legal duty on employers to consult trade unions on the introduction of “invasive or automated AI technologies in the workplace”

Work with trade unions to investigate and campaign against “unscrupulous” use of technology in the workplace.

Perhaps it’ll be the unions who save us from superintelligence?

AI policy IV. AI on (and off) the international stage

ON: Deputy Prime Minister Oliver Dowden warned the UN General Assembly that “global regulation is falling behind current advances” in AI. (H/t The Independent)

I’ve written about why Dowden matters before. Brits probably don’t think about their Deputy PM as much as the Americans think about the Vice President. But Dowden, whose role involves responsibility for long-term resilience including AI and pandemic preparedness, really matters (especially if you’re a longtermist).

What’s interesting about Dowden’s UN speech is that he said:

Because tech companies and non-state actors often have country-sized influence and prominence in AI, this challenge requires a new form of multilateralism.

I don’t know much about geopolitics and ‘Big Tech companies are geopolitical actors now’ isn’t a particularly hot take.3 But I think it’s worth watching this space: it’s not inconceivable that AGI labs become as powerful as nation states and an international response to AI should take that seriously.

OFF: Sunak resisted Spanish demands to prioritise AI at Granada. The European Political Community (larger than the EU and still including the UK) met earlier this month. The Telegraph reported (paywalled) that Spain, the summit host country, wanted to focus on AI but Sunak pushed hard instead for a focus on migration. Apparently, the French had to mediate.

This story seems at odds with Sunak’s clear-sighted focus on AI as an existential threat. But Sunak’s government has taken a hardline, ‘culture wars’ stance against migration:

One of his five key pledges was to stop the boats

His party is dancing with the radical prospect of breaking away from the European Convention on Human Rights because the European Court of Human Rights is blocking their plan to deport migrants to Rwanda

His Home Secretary was widely criticised as racist for a conference speech which was compared to Enoch Powell’s ‘rivers of blood’ rhetoric.

It’s entirely possible that Sunak, who was supported by Italy’s far-right Giorgia Meloni in the push to put migration at the top of the Granada agenda, was prioritising politics-as-usual over AI safety. That seems like the most reasonable explanation to me, albeit very disappointing to those concerned about AI.

AI policy V. Supercomputer for Scotland

Last month, I reported on a supercomputer coming to Bristol.

Now, the UK government has announced a supercomputer is coming to the University of Edinburgh too. The “exascale supercomputer” will be able to perform a billion billion calculations each second, 50 times faster than any existing computer in the UK, and will be funded by UK Research and Innovation (UKRI). Installation is due to begin in 2025; it’s unclear when the machine will be operational.

I’m still a little unclear (as I think all non-experts are!) on the exact implications of supercomputers, generally and for AI specifically. Donelan says:

If we want the UK to remain a global leader in scientific discovery and technological innovation, we need to power up the systems that make those breakthroughs possible.

This new exascale computer in Edinburgh will provide British researchers with an ultra-fast, versatile resource to support pioneering work into AI safety, life-saving drugs, and clean low-carbon energy. It is part of our £900m investment in uplifting the UK’s computing capacity, helping us drive stronger economic growth, create the high-skilled jobs of the future and unlock bold new discoveries that improve people's lives.

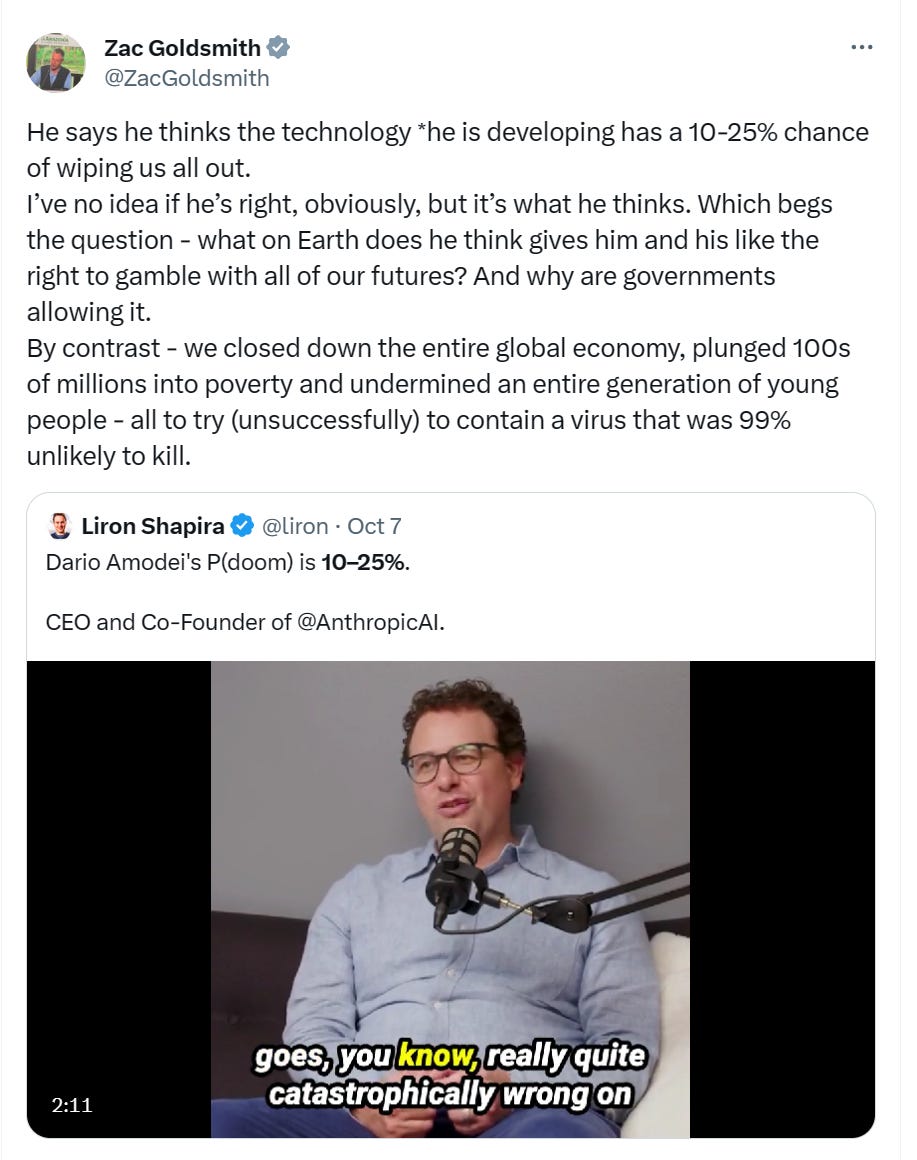

AI policy VI. Zac Goldsmith worried about AI extinction

I don’t have much to add. Goldsmith sits in the House of Lords, was formerly a Conservative Environment Minister, and has pushed the Tories, including Sunak, to do better on climate change. I don’t think the comparison to COVID is helpful.

Animals policy I. Legislation for the animals (but not enough)

Mea culpa: I missed some important news in my last newsletter! The Animals Abroad Bill officially passed into law on 18 September. The new law involves “a ban on the advertising and sale of specific unethical activities abroad where animals are kept in captivity or confinement, subjected to cruel and brutal training methods, forced to take selfies or are ridden, drugged and de-clawed.”

Animals exploited for tourism are salient, sympathetic icons of what’s broken in our relationship with our fellow creatures. I’m glad we’re making progress here – but it’s important to remember that the largest source of animal suffering is factory farming. Animals suffer at home, too.

The bill was introduced by Conservative MP Angela Richardson and Conservative Lord Black of Brentwood. The Conservative Party deserve some applause for their work on animal welfare. But they’ve failed to live up to their manifesto promises. Here’s The Independent, reporting on fact-finding from the RSPCA:

The government has backtracked on 15 promises to improve animal welfare, including some from its own election manifesto, new analysis claims.

The broken pledges include a ban on live exports, a crackdown on puppy smuggling, curbing sheep worrying and outlawing foie gras imports.

Commitments to write high animal-welfare standards into post-Brexit trade deals and to consult on ending cages for farm animals have also been dropped, according to the RSPCA.

Fortunately, animal advocates have been at both party conferences pushing MPs to say: Animals Matter. Here, for example, is Eddie Izzard at the Labour conference:

The Animals Matter campaigning was done by the Humane Society International, RSPCA, Compassion in World Farming, and Four Paws.

Since then, CIWF have also been in Parliament for an event hosted by former Defra Sec George Eustice MP where, in collaboration with other animal welfare groups, they encouraged MPs to support much-needed animal welfare legislation. This includes legislation to ban live exports, ban the import of puppies and kittens, and make dog abduction a specific offence. Thanks to all MPs who turned out to hear about these important matters. Read CIWF’s report here.

Also: snare and glue traps banned in Wales. “The ban is part of Wales’ first Agriculture Act led by Rural Affairs Minister Lesley Griffiths who expressed her pride in the fact that Wales is the first of the UK nations to introduce such a move.” (h/t ITV News)

Animals policy II. Meat and international trade

Politico report that meat might be a stumbling block for a UK-US trade deal. Defra has been resisting pressure from the US to permit them to export chlorinated chicken and hormone-injected beef to the UK. The Department for Business and Trade are pushing back on Defra, and the ball is with the PM. “It is now with the PM [Rishi Sunak] to make a decision on whether to include it or not,” says an anonymous government official.

Sunak and Biden want to get a trade deal done before their respective 2024 elections.

I don’t think most of the political pressure here is about animal welfare; for years, ‘chlorinated chicken’ has been symbolic of the inferior goods Britain might expect from its post-Brexit trade deals. But it’s worth noting that there could be animal welfare implications, and appreciating that any opportunity to affect international food policy is a high leverage opportunity for animals.

Meanwhile, Jacob Rees-Mogg has been in hot water with farmers for backing the import of Australian hormone-injected beef. Yes: hormone-injected beef, just like the Americans want to sell us.

National Farmers Union President Minette Batters hit out at Rees-Mogg, Tweeting:

Animals policy III. Alternative proteins being fast-tracked

The Telegraph report that the UK is to fast-track approval for lab-grown meat, and sign a deal with Israel to boost collaboration on alternative proteins. Here’s the source; paywalled.

I have previously written that the Food Standards Agency (FSA) could take up to 18 months to review novel foods, meaning that lab-grown meat could be sold in the UK from 2025. But now the FSA are “considering future changes” to lab-grown meat’s approval process to “remove unnecessary burdens on business”. It’s not clear when lab-grown meat will be on sale in the UK, but this is unambiguously good news.

Although reported in the same article, the collaboration with Israel is really separate news. “The UK Government is poised to sign a bilateral agreement to boost collaboration on lab-grown meat with Israel, which is at the forefront of the movement”. Israel is indeed a world leader in alternative proteins; Aleph Farms is an Israeli company, which earlier this year submitted the first FSA application to sell lab-grown meat in the UK. I’m unclear if, or how, the war with Hamas will impact the Israeli alternative proteins industry.

Here’s a quote from Science Minister George Freeman:

There are some good signs that in the DNA of homo sapiens there’s a need for meat and we are not going to be able to meet that through traditional husbandry with nine billion hungry mouths to feed by 2050 - we’re going to have to generate novel sources. If we don’t quickly generate ways to develop very low cost protein, we’re going to see huge geographical instability.

He’s, frankly, wrong that we need meat. (I’m thriving on my vegan diet!) But he’s completely right that current animal agriculture isn’t sustainable, especially with projected increases in population. We need food system transformation to overcome climate change, food insecurity and mass animal suffering – fast-tracking alternative proteins is a good step. If you want to tell UK politicians to support the alternative proteins industry, consider signing this open letter to the Prime Minister. It reads, in part:

“We call on you to make a £1 billion ‘moonshot’ investment between now and 2030 to fuel a rapid protein transition and help make the UK a world leader in the crucial technology of sustainable proteins.”

Animals policy IV. Is Britain ready for ‘nanny state’ meals?

Some interesting polling has recently come to light about Britain’s relationship with “nanny state” policy. I don’t want to read too much into this, but if you squint, you might see early flickers of support for a more interventionist food policy. This is controversial, both because important liberal principles suggest food choice be left to the individual and because government intervention on food could backfire easily, but it might become necessary for the government to encourage people to eat less meat – due to the meat industry’s negative impacts on animal welfare, the climate, and public health.

The polls I’m talking about are:

A Food, Farming and Countryside Commission report which found 75% agreed the government is “not doing enough” to ensure everybody can afford healthy food

The British Social Attitudes survey, which found both Conservative and Labour voters see the government as having more responsibility than a few years ago. There’s good analysis at Sam Freedman’s Substack here and Mark Pack’s Substack here.

Related: in response to a plan from Sunak to ban the sale of cigarettes to anyone currently aged 14 or younger, a former “food tsar” has called for public health strategy to focus on fast food and obesity. Soon, UK public policy will have to confront how unsustainable meat eating is hurting our health – just today, the news is reporting on a new study showing a link between red meat and diabetes.

But the Conservative Party definitely aren’t ready to talk about meat reduction policy: at the Tory conference, Net Zero Minister Claire Coutinho extended the party’s previous mistruth that they were blocking plans for a meat tax into a full-blown lie that Labour actively supported taxing meat. She was grilled by Sky News’s Sophy Ridge; I recommend checking out the video, but here’s a write-up too.

Thanks for reading…

You may also be interested in…

This BBC report: Schools and NHS caterers ‘must stop’ antibiotic overuse. Antibiotic resistance is linked with industrial animal agriculture, and poses a huge threat to public health. I’m heartened to see a new Charity Entrepreneurship-incubated charity working on this vital problem!

This TIME report: Prime Minister Rishi Sunak Has Lost Control of Britain’s Conservative Party. I agree pretty much entirely with the author’s take. I think the focus on laissez-faire, Trussian economics vs far-right, Braverman-ian ‘culture wars’ rhetoric is an interesting lens to look at the future of the Tory party – but maybe a false dichotomy.

The EU recently dropped important animal welfare pledges, despite huge public support. This Vox piece is an easy explainer of the controversy.

Emergent capabilities: This month, AI translated text on an ancient scroll burnt in the Vesuvius eruption.

London vegan restaurant rec: All Nations Vegan House in Dalston served me some delicious Caribbean food yesterday!

Vegan of the month: I’d like to spotlight Joanne Cameron, subject of this interesting New Yorker profile.

1. EAG for political geeks.

2. A brief EU politics digression: it wasn’t even clear that Várhelyi was allowed to make this decision unilaterally, and the reversal came from an emergency meeting of EU foreign ministers. EU leadership is now divided over Israel/Palestine, mapping onto and exacerbating existing tensions about Ursula von der Leyen (who visited Israel shortly after the Hamas attacks) overstepping on foreign policy. See here for more.

3. Ronan Farrow’s New Yorker piece on Elon Musk is an extremely insightful version of this take from a really intrepid investigative journalist.