Sunak, Labour, CMA: What they're thinking about AI companies

(3/3) Doing Westminster Better after the AI Safety Summit

This is Doing Westminster Better, my newsletter about what UK politics means for global priorities. I’d promised an AI news update a few weeks ago, looking at whether the Prime Minister’s bite was living up to his bark after hosting the AI Safety Summit, but felt we were best off waiting until the dust cleared on the OpenAI drama. Now that things have settled down, at least momentarily, let’s check in on politicians’ feelings about AI systems – and especially their feelings about the companies building them.

The AI Safety Summit is already ancient history after the “1) What”-ness of the OpenAI drama and the Gemini drop. But it’s worth remembering that, in important ways, Rishi Sunak’s summit was a clear success:

Countries including the US and China signed the Bletchley Declaration, recognising that “AI should be designed, developed, deployed, and used, in a manner that is safe, in such a way as to be human-centric, trustworthy and responsible.”1

AI labs made voluntary responsible scaling commitments

The UK has established its own AI Safety Institute (AISI)2

Yoshua Bengio will chair an IPCC-style report on AI risk

In about six months, the world will zoom into a sequel summit in South Korea and, about six months later again, will reassemble in person in France.

What’s undeniable is that AI risks, including the threat of human extinction from superintelligence, are now a priority of governments across the world. So it’s surprising that Sunak’s own government is now stalling on implementing necessary and popular AI safety legislation.

“The UK had an ambition of becoming an international standard-setter in AI governance,” said Greg Clark, chair of the House of Commons science, innovation and technology select committee. “But without legislation [ . . . ] to give expression to its preferred regulator-led approach, it will most likely fall behind both the US and the EU.” (from the FT; paywalled)

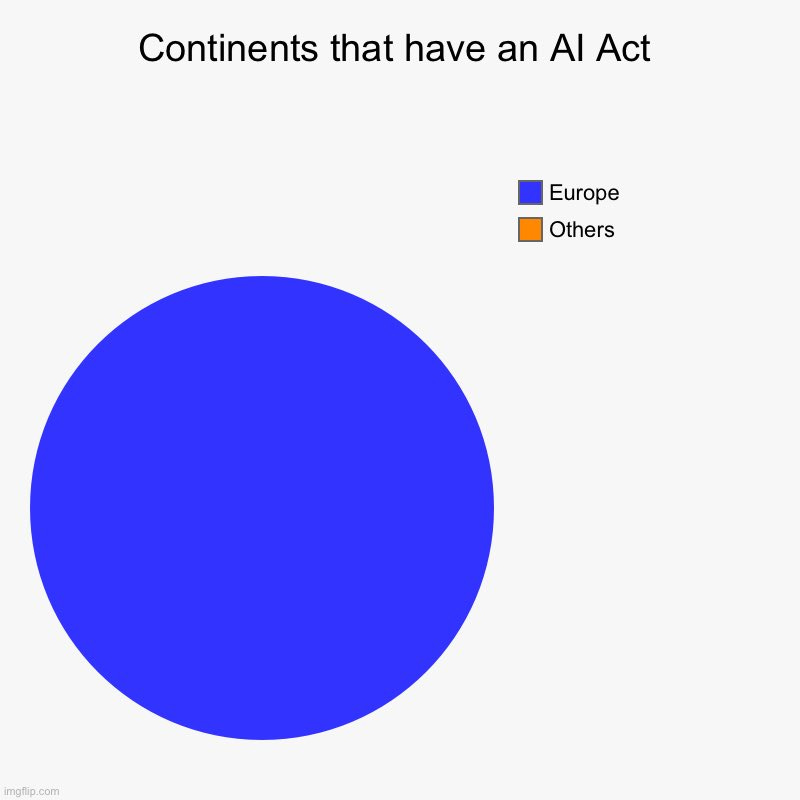

We’re certainly lagging behind the EU, who have secured an AI Act after extremely lengthy negotiations. What gives?

Where’s the policy, Mr Sunak?

There are a couple of narratives that have emerged as journalists try to explain the empty space where we might expect Sunak’s AI policy to be. I think it’s worthwhile disentangling them.

Narrative #1: The UK bit off more than we could chew. An America-sized problem demands an American solution.

This story was the first on the scene. Just before Sunak’s summit, Joe Biden one-upped the PM with a sweeping Executive Order on AI, including the creation of America’s own AI safety research institute. At the signing ceremony, shortly before attending the UK’s summit, Vice President Kamala Harris said:

“Let us be clear: when it comes to AI, America is a global leader. It is American companies that lead the world in AI innovation. It is America that can catalyse global action and build global consensus in a way that no other country can.”

Cue opinion pieces like John Thornhill’s in the FT: “Step aside world, the US wants to write the AI rules”. But Biden acting on AI doesn’t justify or explain Sunak failing to act on AI; it merely highlights Sunak’s inaction by contrast. Global coordination on AI doesn’t mean waiting for the US to tell us what to do; it means every country stepping up to the plate.

Narrative #2: The UK is striving for evidence-based, Really Good legislation, and that takes time.

This is the party line. Here’s Michelle Donelan, Secretary of State for Science, Innovation and Technology:

“I don’t think it’s helpful to set arbitrary deadlines on regulation [...] there needs to be an empirical approach. [...] Are we ruling out legislation? Absolutely not. We’re trying to do things that are faster than legislation.” (source)

And here’s Jonathan Camrose, minister for AI and intellectual property, who (to the best of my knowledge) was the first member of government to publicly recognise AI as an existential risk:

“I would never criticise any other nation’s act on this,” said Camrose. “But there is always a risk of premature regulation.” In rushing to introduce industry controls, “you are not actually making anybody as safe as it sounds”, he said. “You are stifling innovation, and innovation is a very very important part of the AI equation”. (source)

(I’d be curious to hear from AI governance experts whether they feel that the EU AI Act, in coming so quickly, has missed opportunities.)

Narrative #3: The UK is settling for voluntary agreements, and these don’t go far enough.

This is the opposition line.

At the time of the Summit, I wrote a piece asking whether the UK would continue to lead on AI safety policy under a Labour government. (You can read that here). To their credit, Labour have been much clearer about what their AI policy will entail since the Summit, and I’m more confident in their capacity to lead on this.

I recommend reading these two pieces to get a sense of Labour’s thinking:

Labour vows to force firms developing powerful AI to meet requirements (The Independent)

AI would have saved my mother from lung cancer, says Labour MP Peter Kyle (The Telegraph)

The latter includes a few paragraphs from Kyle, who is the Shadow Secretary of State for Science, Innovation and Technology. It’s worthwhile hearing his personal reflections on medical technology and AI safety; in particular, his writing is a reminder that people in the UK and across the world will benefit in really tangible, important, human ways from advances in AI.

The key policy commitment on AI safety is to make pre-deployment safety testing mandatory for AI companies. Here’s Kyle in The Telegraph:

The UK is at risk of being left behind by relying on the goodwill of the companies themselves. A voluntary system is not something you can rely on when the risks are so massive.

Labour would put the country’s security first and require companies developing the most powerful AI to carry out and report safety tests as they move forward.

Many of the labs are starting to do this as good practice, but making it a requirement will reassure people that the most powerful AI should be developed here, shaped by our democratic values.

The devil is where else but in the details: we still have to see which AI models will be subject to this testing, and what the testing will look like. But it seems robustly good to mandate safety testing rather than leave it up to the AI labs. Before the Summit, MPs, civil servants and experts were saying ‘don’t let the companies mark their own homework’; making testing mandatory is what that means in practice.

So how should we feel about Starmerite AI safety?

At the moment, it looks like Keir Starmer is willing to do everything Rishi Sunak is willing to do, and a little extra. Sunak’s voluntary responsible scaling policies would be made mandatory under a Labour government.

But it’s still hard to say Starmer really gets the importance of AI safety in the way Sunak seems to. I don’t have any insider info, and maybe I’m underestimating the Labour leader. That said, Starmer’s approach seems to be motivated not by a concern for existential security, but by the importance of keeping up with the neighbours,3 and an ideological uneasiness with Big Tech.

This leaves one important area where Labour are still behind the Conservatives on AI safety. Sunak has acknowledged the widely (but non-universally) held expert opinion that advanced AI poses an existential threat to humanity. So have government reports and his AI Minister. But as far as I’m aware, nobody from Labour has publicly acknowledged these stakes.

Perhaps this is unimportant, a mere symbolic gesture. But the Labour Party has a proud history of leading Britain’s political reckoning with existential threats. In 1960, the conference voted for unilateral nuclear disarmament, recognising that a world without nuclear weapons would be a better, safer world.4 I hope that the party soon recognises the existential threat of advanced AI and the UK achieves a bipartisan consensus here. But action is more important than rhetoric and, while it’s early days, Labour seem to be supporting policies to keep us safe from catastrophically dangerous Frontier AI.

Competition and Markets Authority to examine OpenAI–Microsoft relationship

Much of the difference between Starmer and Sunak on AI turns on their attitudes to Big Tech companies. Starmer’s is a business- and innovation-friendly Labour, so the distinctions are fine, but I think it’s this ideological difference that leads to Sunak obsequiously interviewing Elon Musk whereas Kyle promises, if not a crackdown, then a mildly firmer hand on AI companies.

So it seems important to understand how the UK thinks about AI companies – and doubly so after the OpenAI corporate governance fiasco.

Now, the Competition and Markets Authority (CMA) has opened an investigation into the relationship between OpenAI and Microsoft. (FT link; paywalled) The CMA have asked relevant parties, including competitors, whether “the partnership between Microsoft and OpenAI, including recent developments, has resulted in a relevant merger situation”. We’re all curious about this!

Readers are probably aware that OpenAI has a complex relationship with Microsoft; OpenAI is a non-profit which owns(?) a for-profit, in which Microsoft has a large profit-capped investment. But the Sam Altman boardroom drama suggests that the formal mechanisms of power are less relevant than the de facto controlling forces of Altman’s star power and Microsoft’s billions. The best explanation comes, predictably, from Bloomberg’s Matt Levine (paywalled).

Whether or not governments across the world recognise this as a merger in all but name matters for whether we should still perceive OpenAI as a non-profit working in humanity’s best interests, or as a standard profit-chasing company captured by the interests of capital. One seems much riskier than the other.

For what it’s worth, the German CMA equivalent recently declared that Microsoft’s relationship with OpenAI didn’t count as a merger; and the US equivalent – the Federal Trade Commission (FTC) – have also opened an investigation. FTC Chairperson Lina Khan has a p(doom) of 15%.

How are the public feeling about AI?

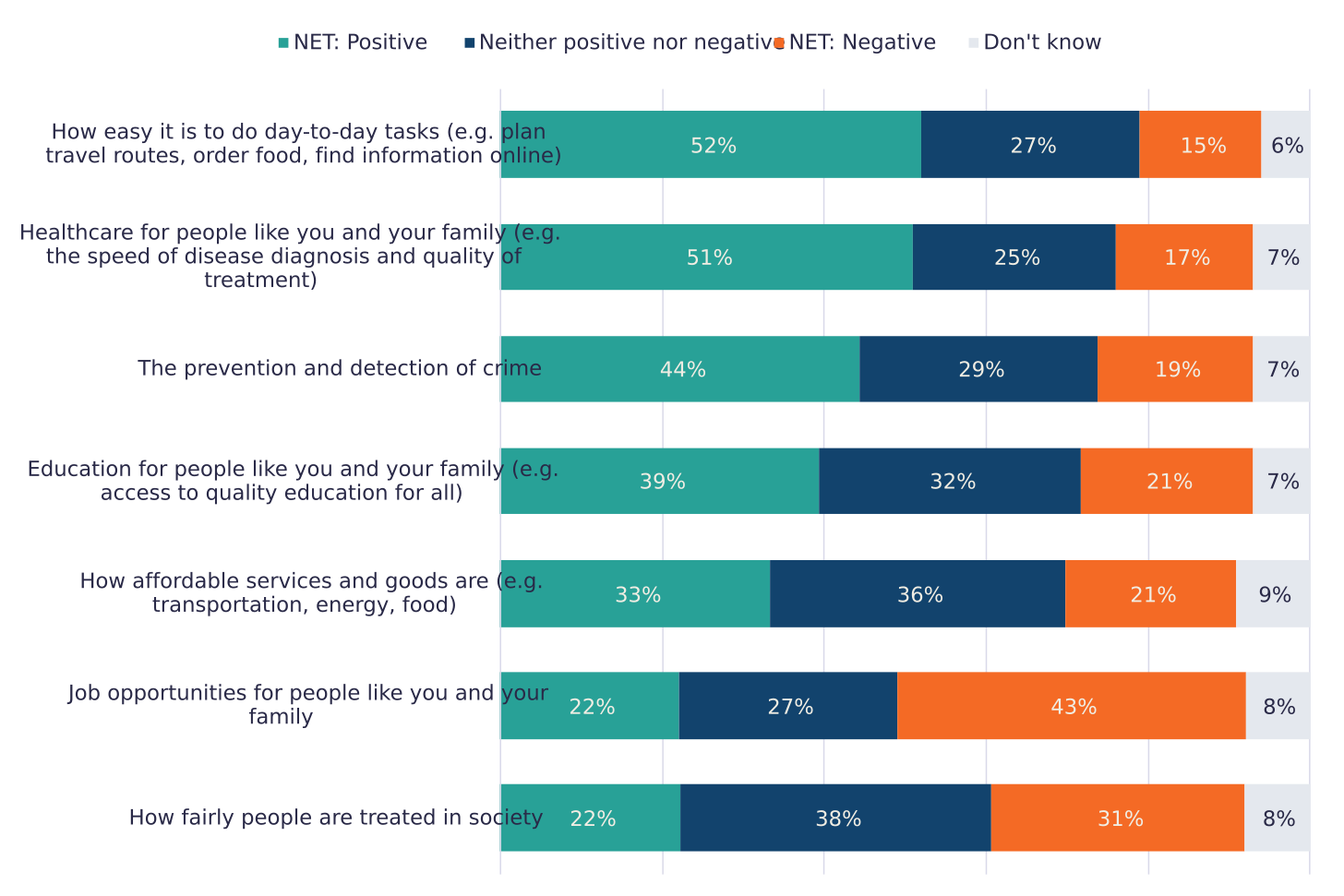

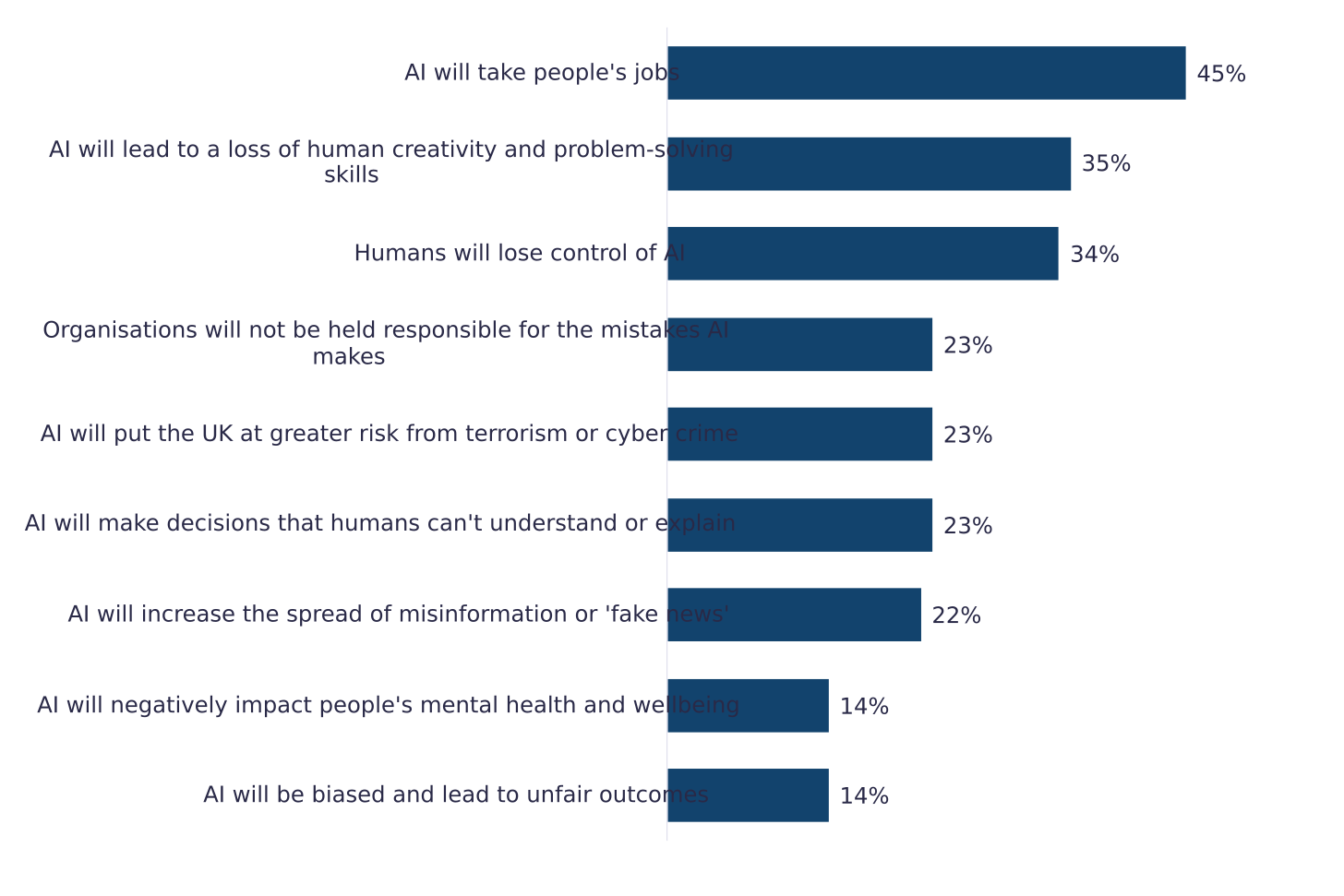

We’ve seen in poll after poll that the general public are aligned with experts’ worries about extreme AI risks, including loss of control. Now, we have new information on public attitudes to AI from DSIT’s Centre for Data Ethics and Innovation. Here’s the full report.

I’ll pull out some particularly interesting information. First up, the expected impact of AI across different situations. ‘Job opportunities’ seems especially important; people can see that AI will be displacing a lot of workers.

Secondly, the greatest risks from deploying AI. Again, job loss is really salient to the general public. Politicians will have to start talking about this seriously soon. But the public are also really worried about loss of control risks.

The more public polling we see, the more I’m convinced that most people are on the same side on AI. There really isn’t that much daylight between what the general public want and what AI safetyists want, or between what Labour are proposing and what the Tories are proposing. Alignment remains an intractable sociotechnical problem, but in some sense we’re all united against the same threats here.

1. This is anthropocentric and so doesn’t go far enough. AI shouldn’t be human-centric but should be aligned with the interests and/or welfare of all sentient beings, including animals. I’m glad that DeepMind’s Iason Gabriel recognises this!

2. As has the US. Don’t worry: I’m workshopping ACDC/AISI, DC puns.

3. As motivation for mandatory safety testing, Kyle specifically cited Biden’s “sweeping executive order which puts requirements on the largest labs to report on the work they are doing,” and the fact that others, “including China and the EU,” were “also taking action to put binding rules in place.” This is the Brussels effect in action: the race for AI safety is on.

4. The motion was controversial in its time. I don’t know how many AI safety advocates would support unilateral nuclear disarmament and I suspect many readers can suggest ways in which nuclear weapons are disanalogous to AI. My intention isn’t to get you to support the CND, but to spotlight a historical moment of Labour thinking about existential risk.